AI stumbles at recognizing fear because it oversimplifies. It leans on facial expressions but misses the vital backstory. Fear isn't just about terrified eyes; it's wrapped in cultural quirks and context. AI's one-size-fits-all view neglects emotional nuance. Datasets are biased, and emotions are caricatured. The complexities of fear elude AI, leading to errors and ethical quandaries. Want the gritty details of why AI just doesn't get fear? It comes down to what lies beneath: context over expression.

Key Takeaways

- AI struggles with cultural differences, failing to interpret the diverse ways people express fear beyond the face.

- Contextual nuances elude AI, leading to inaccurate fear recognition based solely on facial cues.

- AI's reliance on biased datasets limits its understanding of fear's complexity.

- Emotional masking and sarcasm hinder AI's ability to accurately discern fear.

- AI lacks the ability to incorporate situational background, which is crucial for accurate emotional interpretation.

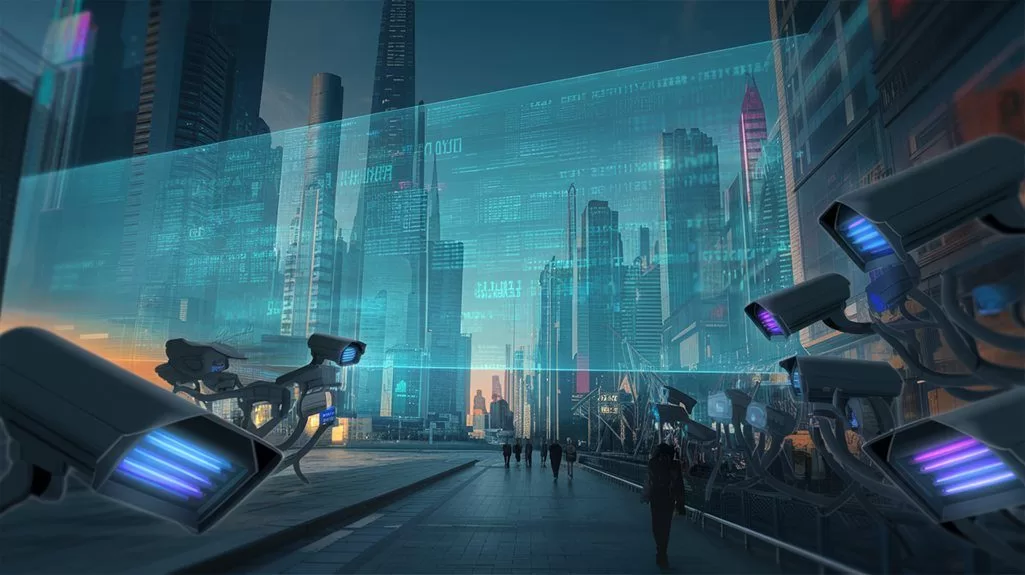

In the domain of artificial intelligence, fear is a tricky customer. AI, in its quest to understand human emotions, often reduces fear to a mere shadow of itself. Simplification, its favorite tool, fails to capture the rich tapestry of emotional nuance inherent in fear. Systems trained on datasets brimming with stereotypical expressions miss the kaleidoscope of cultural expressions that define fear across the globe. Such oversights are not just blunders; they're a recipe for disaster. The reliance on facial recognition technology also raises privacy risks, since biometric information can be stored improperly or misused.

Consider the intricacies of fear's expression. One culture may widen the eyes; another may narrow them. AI, with its one-size-fits-all approach, trips over these variations like a clumsy dancer at a cultural festival, or a blindfolded guest at a masquerade ball fumbling to recognize faces it should know. Relying on facial expressions alone is like trying to paint a rainbow with just two colors. Emotional masking and sarcasm muddy the waters further, leaving AI scratching its metaphorical head.

Context, the invisible hand that guides emotional expression, eludes AI. Without it, fear becomes a puzzle missing half its pieces. AI often finds itself in a quagmire, unable to navigate the subtleties of a terrified whisper or a nervous laugh. It's like trying to read a novel by skimming only the bold text. Context is king, and AI is a court jester prancing around without understanding the script. In place of context, AI systems rely on caricatured representations of complex human emotions, which leads to misinterpretation and oversimplification. Contextual factors such as situational background and upbringing strongly shape emotional behavior, and the current limitations of AI make these nuances hard to incorporate.

Biases and inaccuracies plague AI's emotion recognition like a bad rash. Human bias seeps into datasets, coloring AI's perceptions with shades of error. Datasets that often lack edge cases provide a skewed lens through which fear is viewed. The irony? AI, meant to be impartial, ends up mirroring our own prejudices.
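One way this skew surfaces is in a per-group audit: a single overall accuracy figure can look respectable while fear recall collapses for a group the training data underrepresents. Below is a minimal Python sketch of such a disaggregated check, assuming a hypothetical evaluation set where each record carries a cultural-group tag, a true label, and a model prediction. The group names and records are invented toy data, not measurements from any real system.

```python
from collections import defaultdict

# Hypothetical toy evaluation records: (group, true_label, predicted_label).
# These values are invented for illustration, not real results.
RECORDS = [
    ("group_a", "fear", "fear"),
    ("group_a", "fear", "fear"),
    ("group_a", "fear", "fear"),
    ("group_a", "neutral", "neutral"),
    ("group_b", "fear", "neutral"),
    ("group_b", "fear", "surprise"),
    ("group_b", "fear", "fear"),
    ("group_b", "neutral", "neutral"),
]


def overall_accuracy(records):
    """Fraction of all records whose prediction matches the true label."""
    correct = sum(1 for _, truth, pred in records if truth == pred)
    return correct / len(records)


def fear_recall_by_group(records):
    """Per group, the fraction of true 'fear' records predicted as 'fear'."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, truth, pred in records:
        if truth == "fear":
            totals[group] += 1
            if pred == "fear":
                hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


if __name__ == "__main__":
    print(f"overall accuracy: {overall_accuracy(RECORDS):.2f}")
    for group, recall in sorted(fear_recall_by_group(RECORDS).items()):
        print(f"{group}: fear recall {recall:.2f}")
```

On this toy data the script prints an overall accuracy of 0.75, while fear recall is 1.00 for `group_a` and only 0.33 for `group_b`. Breaking results out by group, rather than trusting one aggregate number, is what exposes the gap.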

Other biometric data, with its promise of deeper insight, sits largely unused. AI's fixation on facial cues neglects the symphony of physiological signals, such as heart rate or skin conductance, that could illuminate fear's true essence. It's akin to ignoring the orchestra while focusing solely on the conductor's baton.

AI's limitations are not just technical; they're cultural and ethical minefields. Social norms, historical contexts, and privacy concerns add layers of complexity. The notion of AI dissecting fear, in all its raw and vulnerable glory, raises eyebrows and ethical red flags. And yet, here we are, entrusting these silicon soothsayers with tasks they are woefully unequipped to handle.
