AI training often walks the line of legality. Privacy laws demand airtight data practices. Keeping up with the EU AI Act? A relentless chase. Intellectual property rights over AI outputs are murky at best. Liability for AI errors? A lawsuit waiting to happen. Don't get us started on data security blunders. Explainability and accountability? Often missing in action. The tech world's balancing act between legal compliance and innovation is dizzying, so read on to untangle the web.

Key Takeaways

- AI training often involves using personal data, necessitating strict compliance with data privacy laws to avoid legal issues.

- Outdated privacy policies require careful review to align with current legal requirements in AI development.

- Bias in AI training data can lead to discriminatory outputs, posing both ethical and legal challenges.

- Intellectual property rights over AI-generated content are unclear, increasing the risk of copyright infringement.

- Lack of explainability in AI decision-making can hinder accountability and complicate regulatory compliance.

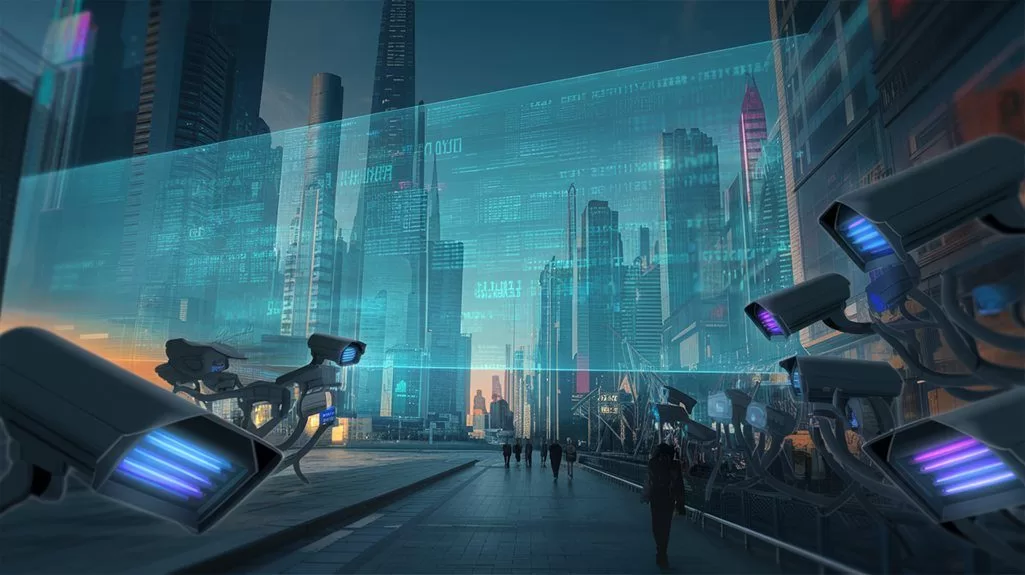

How on earth did AI training become such a legal minefield? It's a question that baffles many. AI development, once a frontier of limitless possibilities, now seems tangled in a web of legal compliance and ethical standards. But let's break it down. At its core, AI training relies heavily on massive datasets. These datasets often contain personally identifiable information, raising data privacy issues. Legal compliance demands strict adherence to privacy laws, which, let's be honest, are about as clear as mud sometimes. And yet, it's non-negotiable.
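To make the PII point concrete, here is a minimal Python sketch of scrubbing obvious identifiers from text before it goes into a training set. The function name `redact_pii`, the placeholder tokens, and the two regular expressions are illustrative assumptions, not a standard. Patterns like these catch only the easy cases (plain emails and US-style phone numbers), so treat this as a first pass and not as proof of compliance with any privacy law.

```python
import re

# Hypothetical patterns for illustration only; real PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    sample = "Contact jane@example.com or 555-123-4567 for details"
    print(redact_pii(sample))
```

Names, addresses, and free-text identifiers will sail straight past a filter like this, which is part of why the legal exposure is hard to engineer away.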

Using historical data? Better check those dusty old privacy policies, because data collected under an old policy may not be cleared for AI training. Misuse could lead to a legal headache no one wants. Companies must navigate the murky waters of updating terms to reflect new uses. Not exactly a walk in the park. The storage and transmission of facial recognition data complicate matters further, since a breach of that data poses significant risks.

Navigating outdated privacy policies is a legal minefield no one wants to traverse.

And then there's the EU AI Act, looming over AI developers like a regulatory specter, demanding adherence to its evolving stipulations. Compliance is critical, but keeping up feels like chasing a moving target. Evolving client expectations demand efficiency and transparency, which in turn drives the need for ethical considerations in AI training.

Bias in training data. Another landmine. AI models can unwittingly inherit biases, leading to outputs that are, frankly, discriminatory. It's a legal risk that demands vigilance. Yet, ensuring transparency in these complex models is a Herculean task. Lack of transparency can complicate decision-making and erode trust, like a slow leak in a tire. The potential for bias also underscores the importance of adherence to ethical standards, as responsible AI usage in law must prioritize transparency and accountability.
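One rough way to put a number on the bias concern is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and their ratio, sometimes called a disparate impact ratio. The function names, the `(group, outcome)` record layout, and the sample data are assumptions made for illustration. A low ratio is a signal to investigate, not a legal finding.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes (1) per group from (group, outcome) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Made-up data: group A is approved 3 times out of 4, group B once out of 4.
    sample = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
              ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
    print(selection_rates(sample))
    print(disparate_impact_ratio(sample))
```

A single ratio hides a lot, including sample size and which outcome counts as "favorable", so it works best as a prompt for closer review.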

On the legal front, intellectual property disputes are the new battleground. AI-generated content often blurs the lines of ownership, and copyright infringement cases are just waiting to happen. Liability for inaccurate AI outputs is another ticking time bomb. Users need to verify the accuracy of what the model produces, but who's got time for that?

Data security concerns bring another layer of complexity. Exposing sensitive data can lead to severe consequences, especially in regulated industries. Compliance with ethical standards isn't just a box to check. It's a growing concern that demands AI models operate within ethical frameworks and respect digital rights.

The lack of explainability in AI decision-making is, quite bluntly, a problem. It hinders legal and ethical accountability. Regulators must balance innovation with consumer protection. Not an easy task. Harmonized standards, anyone? They could facilitate efficient AI development, but the road to international consensus is paved with challenges.
