Embodied AI wades into murky waters: it's fueled by innovation yet shackled to surveillance. Privacy? A joke, with data collected left and right without so much as a 'May I?'. Bias? It's as if these systems were crafted to exclude anyone who isn't a data scientist. The algorithms are so opaque they may as well be speaking in hex. Am I wrong? In healthcare, embodied AI could be a hope or a menace, and its ethical implications seem endless. It's a playground of potential, but who pays the price? Curious to learn more?

Key Takeaways

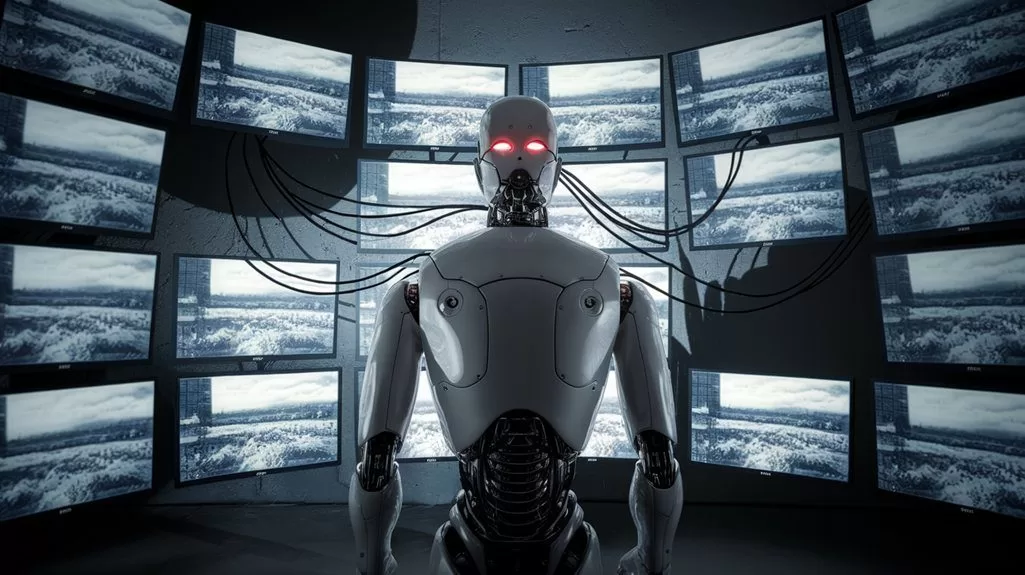

- Embodied AI innovation often relies on extensive surveillance, raising significant data privacy concerns.

- Lack of explicit consent for data use in AI systems calls the ethical acceptability of such innovations into question.

- Algorithmic biases in AI can reflect their creators' prejudices, risking the reinforcement of societal stereotypes.

- Complex AI algorithms challenge transparency and accountability, complicating ethical oversight.

- Surveillance-driven AI can marginalize individuals with disabilities, perpetuating inequality and technoableism.

How exactly do we navigate the murky waters of embodied AI's ethical challenges? The landscape is fraught with potential pitfalls, especially when considering data privacy and the ethical implications of these systems.

Embodied AI, designed to mimic human-like interactions, often falls prey to algorithmic biases. These biases aren't just theoretical; they're real and pervasive, reflecting the prejudices of their mainly WEIRD (Western, Educated, Industrialized, Rich, and Democratic) creators. The result? Reinforced stereotypes and a cultural homogeneity that can shut marginalized groups out of opportunities and services. Facial and voice recognition technologies, for example, have shown bias against individuals with darker skin tones, and the prevalence of such bias underlines the urgent need for regulation and ethical oversight.

Data privacy is a cornerstone issue here. Embodied AI systems collect data extensively, which raises significant privacy concerns. Often, that data is reused for secondary purposes without explicit consent, a practice that dances perilously close to the edge of ethical acceptability.

While regulations like the EU's AI Act attempt to address these issues, current legal frameworks are still playing catch-up with the breakneck pace of technological advancement. Yes, the US Privacy Act of 1974 offers some protection, but it governs only how federal agencies handle personal records. Let's be real: relying on it today is a bit like using a flip phone in a smartphone world.

The ethical implications extend further when we consider how embodied AI systems can act as gatekeepers. By embedding biases in their algorithms, these systems can exclude certain groups, often inadvertently, perpetuating inequalities rather than solving them.

And let's not forget technoableism: the habit of framing disability as a problem for technology to fix. AI systems often marginalize individuals with disabilities by their very design, despite lofty claims of inclusivity and accessibility.

Transparency and accountability are essential yet elusive goals in this domain. The demand for explicability in AI decision-making is growing, but achieving it remains a challenge. It's all well and good to say that decisions should be explainable, but when the algorithms themselves are shrouded in complexity, how transparent can they really be?

The call for digital governance is louder than ever, but the path to effective oversight is littered with obstacles.

In the healthcare sector, the integration of embodied AI poses unique challenges. On one hand, these systems offer innovative mental health applications. On the other, they risk infringing on patient autonomy and data ethics, especially when regulatory frameworks are murky at best.

The potential for harm in clinically sensitive areas, such as mental health care, cannot be ignored.