Linear Regression, Logistic Regression, Random Forest, Support Vector Machines (SVM), and Naive Bayes are among the most widely used machine learning algorithms for data analysis, covering both continuous outcomes and classification tasks. They are applied across healthcare, marketing, and finance because they are simple to train and often accurate. Random Forest reduces overfitting by averaging many decision trees, SVM performs well on high-dimensional data, Naive Bayes stays tractable by assuming features are independent given the class, and logistic regression outputs class probabilities. The sections below cover these algorithms along with dimensionality reduction, clustering, neural networks, recommendation systems, and ensemble methods.

Key Takeaways

- Linear Regression, Logistic Regression, Random Forest, Support Vector Machines (SVM), and Naive Bayes are widely used in predictive modeling applications for continuous outcomes and binary classification tasks.

- Techniques like Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) are used for data dimension reduction and visualization.

- SVMs excel in high-dimensional data classification by finding the maximum-margin hyperplane, while Naive Bayes scales to high-dimensional datasets by assuming features are conditionally independent.

- For pattern recognition and clustering, algorithms like K-means and DBSCAN are used to identify similarities and anomalies in large datasets.

- Neural networks adapt to complex patterns and relationships in data and are particularly effective in image and video data analysis with Convolutional Neural Networks (CNNs).

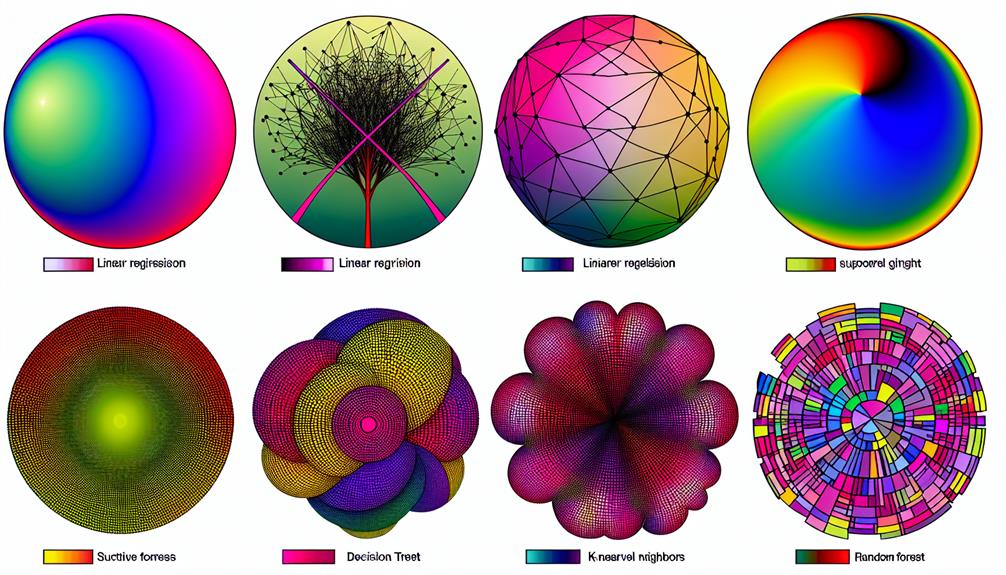

Most Used ML Algorithms

The most widely used machine learning algorithms for data analysis are Linear Regression, Logistic Regression, Random Forest, Support Vector Machines (SVM), and Naive Bayes. Each offers distinct strengths in predictive modeling applications.

These algorithms have been extensively employed across various domains, from predicting continuous outcomes to binary classification tasks.

Linear Regression and Logistic Regression are staples in the machine learning toolkit. Linear regression, being one of the simplest yet most effective algorithms, is ideal for predicting continuous outcomes, whereas logistic regression excels in binary classification tasks, providing probabilities for predictions. These algorithms are widely used in healthcare, marketing, and finance for their simplicity and accuracy.

Random Forest and SVM are also commonly used machine learning models. Random Forest, as an ensemble learning method, effectively reduces overfitting and handles large datasets with high dimensionality, ensuring robust predictions. SVMs excel in high-dimensional data by finding the best hyperplanes for classification tasks.

Naive Bayes, a simple yet powerful algorithm, handles both categorical and numerical features, making it an essential tool in machine learning.

Techniques for High-Dimensional Data

High-dimensional datasets call for techniques that keep the number of features manageable. This section covers two groups of methods: dimensionality reduction and classification algorithms that tolerate a large number of features.

These techniques are specifically designed to handle high dimensionality, making it possible to uncover meaningful patterns and relationships within the data.

Data Dimension Reduction

When dealing with high-dimensional datasets, dimensionality reduction techniques such as Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) become essential for reducing the number of features while preserving essential information. These techniques are critical for handling large datasets, improving computational efficiency, and aiding in data visualization.

Principal Component Analysis (PCA) is a common technique for reducing the dimensionality of high-dimensional data by transforming variables into linearly uncorrelated components. It helps in identifying patterns and relationships in data while preserving the most important information in a lower-dimensional space. PCA is a linear technique that is particularly useful when the data exhibits a linear structure.
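
The transformation into uncorrelated components can be sketched with NumPy alone. This is a minimal illustration, not a production implementation: the `pca` helper and the toy data are assumptions made for the example, and the components are obtained from a singular value decomposition of the centered data.

```python
import numpy as np

def pca(X, n_components):
    """Project X (n_samples x n_features) onto its top principal components."""
    X_centered = X - X.mean(axis=0)
    # SVD of the centered data: the rows of Vt are the principal directions.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = S ** 2 / (X.shape[0] - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return X_centered @ components.T, components, ratio

# Hypothetical toy data: the second feature is almost a multiple of the first.
rng = np.random.default_rng(0)
x = rng.normal(size=200)
X = np.column_stack([
    x,
    2 * x + rng.normal(scale=0.1, size=200),
    rng.normal(scale=0.1, size=200),
])

Z, components, ratio = pca(X, n_components=2)
print(Z.shape)   # reduced data: 200 samples, 2 components
print(ratio)     # share of total variance kept by each component
```

Because the first two features move together, nearly all of the variance ends up in the first component, which is the situation where PCA compresses data with little loss.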

Another dimension reduction technique is t-SNE, which effectively visualizes high-dimensional data in a lower-dimensional space. Unlike PCA, t-SNE is a nonlinear technique that focuses on preserving pairwise similarities between data points in a lower-dimensional space, making it well-suited for complex data visualization.

Classification Techniques

High-dimensional data calls for classification algorithms that remain reliable when the number of features is large. The techniques below are commonly used to cope with the ‘curse of dimensionality’ and are essential for effective data analysis.

Main Classification Techniques for High-Dimensional Data:

- Support Vector Machines (SVM): SVMs are particularly effective in high-dimensional data because they classify by finding the hyperplane that separates the classes with the largest margin.

- Naive Bayes: Naive Bayes classifiers are well-suited for high-dimensional datasets because they assume features are independent given the class, so each feature is modeled separately. This property makes them valuable for tasks where data has numerous features with limited sample sizes.

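- K-Nearest Neighbors (KNN): KNN classifies a point by the labels of its nearest neighbors, so it relies on the local structure of the data and is useful for pattern recognition tasks. Distances become less informative as the number of features grows, so KNN is often paired with dimensionality reduction.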

- Random Forest: Random Forests are known for their ability to manage large datasets with high dimensionality, providing feature importance ranking for both classification and regression tasks.

Each of these algorithms copes with high-dimensional data differently, so the right choice depends on the number of features, the number of samples, and how the features relate to one another.
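
To make the independence assumption behind Naive Bayes concrete, here is a minimal Gaussian Naive Bayes sketch in NumPy. The class name, the toy data (20 features, 30 samples per class), and the variance floor of `1e-9` are all assumptions chosen for illustration.

```python
import numpy as np

class GaussianNaiveBayes:
    """Minimal Gaussian Naive Bayes; an illustrative sketch, not a library API."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        # One mean and one variance per (class, feature): features are modeled separately.
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        self.vars_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + 1e-9
        self.log_priors_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def predict(self, X):
        diff = X[:, None, :] - self.means_[None, :, :]
        # Independence assumption: the class log-likelihood is a sum over features.
        log_lik = -0.5 * (np.log(2 * np.pi * self.vars_) + diff ** 2 / self.vars_).sum(axis=2)
        return self.classes_[np.argmax(log_lik + self.log_priors_, axis=1)]

# Hypothetical toy data: many features, few samples, two shifted classes.
rng = np.random.default_rng(0)
n_per_class, n_features = 30, 20
X = np.vstack([
    rng.normal(loc=0.0, size=(n_per_class, n_features)),
    rng.normal(loc=3.0, size=(n_per_class, n_features)),
])
y = np.array([0] * n_per_class + [1] * n_per_class)

model = GaussianNaiveBayes().fit(X, y)
accuracy = np.mean(model.predict(X) == y)
print(accuracy)
```

The model stores only a mean and a variance per class and feature, which is why the number of parameters grows linearly with the number of features.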

Handling High Dimensionality

High-dimensional datasets, characterized by a large number of features relative to the number of samples, require targeted strategies to ensure meaningful insights. The primary challenge in high-dimensional data analysis is the curse of dimensionality, which negatively affects model performance and computational efficiency.

Various techniques, each with strengths and weaknesses, are employed to handle such data effectively. Principal Component Analysis (PCA) is a popular linear dimensionality reduction technique, transforming high-dimensional data into a lower-dimensional space while preserving important information.

Another technique is t-SNE (t-distributed Stochastic Neighbor Embedding), a non-linear dimensionality reduction method that effectively preserves local structures. Linear Discriminant Analysis (LDA) is also used for dimensionality reduction, particularly in multi-class classification problems, as it seeks to maximize the separation between classes.

These techniques, along with feature selection, feature engineering, and regularization, help mitigate the issues associated with high-dimensional data. By addressing high dimensionality, machine learning models can be made more interpretable, less prone to overfitting, and more accurate in their predictions.

Effective handling of high-dimensional data is a vital step towards achieving meaningful insights and best performance in data analysis.

Effective Advancements in Neural Networks

Advances in deep learning have greatly improved performance across many machine learning tasks. Neural networks are built from layers of artificial neurons, loosely inspired by biological neurons, which lets them capture complex patterns and relationships in data and makes them a common choice for image recognition and natural language processing tasks.

Key highlights of these advancements include:

- Neural Architecture Flexibility: Neural networks can be built with any number of layers and interconnected nodes, so the architecture can be sized to the complexity of the task.

- Convolutional Neural Networks (CNN): CNNs are specifically designed to handle image and video data, leveraging sliding filters to identify important features.

- Deep Architecture Requirements: Neural networks require substantial computational resources for training due to their deep architecture and large datasets.

- Performance Improvements: Advances in neural networks have remarkably enhanced performance across various machine learning tasks, making them invaluable tools in data analysis.
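
The sliding-filter idea behind CNNs can be shown without a deep learning framework. The sketch below applies a hand-written vertical-edge filter to a tiny image; in a real CNN the filter values are learned during training. The `conv2d` helper, the image, and the kernel are assumptions made for the example, and the operation is the cross-correlation that deep learning libraries usually call convolution.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# 5x5 image: dark columns on the left, bright columns on the right.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Filter that responds where brightness increases from left to right.
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

feature_map = conv2d(image, kernel)
print(feature_map)
```

The feature map is large where the window covers the dark-to-bright edge and zero where the window covers a uniform region.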

Clustering and Pattern Recognition

In the field of unsupervised machine learning, clustering algorithms like K-means and DBSCAN elegantly group data points based on similarities, thereby facilitating customer segmentation, anomaly detection, and the discovery of natural structures in data.

Additionally, the utility of these algorithms extends to various fields, such as image processing and recommendation systems.

Clustering Applications

Clustering techniques, including K-means and DBSCAN, play an essential role in recognizing patterns and identifying anomalies within large datasets. These methods, rooted in unsupervised learning, enable businesses to uncover hidden structures within their data, facilitate customer segmentation, and detect anomalies effectively.

Key applications of clustering algorithms include:

- Customer Segmentation: Clustering helps categorize customers into distinct groups based on their preferences, behaviors, and other relevant characteristics.

- Anomaly Detection: By identifying clusters, businesses can pinpoint unusual patterns, indicating potential issues or opportunities.

- Data Visualization: Clustering simplifies visualization and organization of large datasets, revealing insight into the relationships between data points.

- Data Quality Monitoring: Clustering algorithms, such as DBSCAN, can be used to detect and handle noise and outliers in datasets, ensuring data accuracy.

These algorithms are particularly useful in handling real-world datasets that often contain irregularities, noise, and outliers. The correct choice of algorithm depends on the nature of the data and the desired outcomes, emphasizing the importance of understanding the strengths and limitations of each clustering technique.
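
As a rough illustration of how K-means groups points, the sketch below alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The `kmeans` helper, its parameters, and the six-point dataset are assumptions for the example.

```python
import numpy as np

def kmeans(X, k, n_iters=100, seed=0):
    """Plain K-means (Lloyd's algorithm) with random initial centroids."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Assignment step: nearest centroid by Euclidean distance.
        distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Hypothetical toy data: two small groups of points far apart.
X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
labels, centroids = kmeans(X, k=2)
print(labels)
print(centroids)
```

The result depends on the choice of K and on the initial centroids, which is the sensitivity to K that makes it worth trying several values on real data.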

Pattern Recognition Techniques

Pattern recognition techniques, including clustering methods like K-means and DBSCAN, provide valuable tools for identifying structures and relationships within data, thereby enhancing insights, decision-making, and anomaly detection capabilities. These techniques are essential in pattern recognition as they help in identifying clusters of similar data points, enabling the detection of anomalies and the segregation of data into meaningful groups.

Pattern Recognition Techniques: Comparative Overview

| Technique | Key Characteristics |
|---|---|
| K-means Clustering | Simple, effective, sensitive to number of clusters (K) |
| DBSCAN Clustering | Robust to outliers, high overhead for large datasets |
| Support Vector Machines | Effective in high-dimensional spaces, used for classification |

Various methods, such as statistical analysis, machine learning algorithms, and data mining, can recognize patterns in data. This process is vital because it aids in making predictions, classifying data, and identifying outliers. Feature extraction, pattern classification, and post-processing are key stages involved in the process. The ability to identify patterns and cluster data points correctly enhances the efficiency of machine learning models and informs better decision-making processes.

Predictive Modeling Techniques

Predictive modeling techniques apply statistical concepts within machine learning workflows to turn historical data into forecasts that support decision-making in various industries.

Here are some essential algorithms that excel in this domain:

- Linear Regression: Linear regression is a fundamental algorithm for predictive modeling. It is widely used because of its simplicity and effectiveness in establishing relationships between variables. Linear regression is commonly used for predicting continuous outcomes, making it a foundational step in many data analysis pipelines.

- Logistic Regression: Logistic regression is specifically designed for binary classification tasks, providing probabilities for different outcomes. This algorithm is extensively used in healthcare and marketing, where binary categorizations are crucial.

- Decision Trees: Decision trees make decisions based on splits in the data, offering fast learning and interpretation of results. This algorithm is effective for predictive modeling tasks, particularly in handling categorical data and non-linear relationships.

- Random Forest: An ensemble learning method, random forest combines many decision trees, each trained on a random sample of the data, to reduce overfitting and handle large datasets. It also reports how much each feature contributes to predictions, making it a powerful tool in predictive modeling.

These algorithms collectively form the backbone of predictive modeling, ensuring that data-driven decisions are made effectively and efficiently across various sectors.
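
As a small illustration of the first algorithm in the list, the sketch below fits a linear regression by ordinary least squares with NumPy. The helper name and the advertising data are hypothetical, and the data is noise-free so that the fitted line can be checked by hand.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Ordinary least squares; returns (intercept, coefficients)."""
    X_design = np.column_stack([np.ones(len(X)), X])  # column of ones for the intercept
    coef, *_ = np.linalg.lstsq(X_design, y, rcond=None)
    return coef[0], coef[1:]

# Hypothetical data: ad spend vs. sales, generated as sales = 2 + 3 * spend.
ad_spend = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
sales = 2.0 + 3.0 * ad_spend[:, 0]

intercept, slope = fit_linear_regression(ad_spend, sales)
prediction = intercept + slope @ np.array([6.0])
print(intercept, slope, prediction)
```

The fit recovers the intercept and slope used to generate the data, and the prediction for a spend of 6 follows from plugging into the fitted line.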

Recommendation Systems and Analysis

Recommendation systems leverage machine learning and collaborative filtering approaches. They aim to provide personalized suggestions by identifying interdependencies between users and items.

Collaborative filtering techniques uncover these interdependencies by aggregating preferences from multiple users. By analyzing these patterns, systems can generate data-driven recommendations tailored to individual tastes and preferences.

Collaborative Filtering

Collaborative filtering is a type of recommendation system that leverages the historical interactions between users and items to personalize recommendations. This approach analyzes user-item interactions, enhancing the user experience and engagement by providing relevant suggestions based on the preferences and behaviors of similar users. Collaborative filtering can be separated into two primary categories: user-based and item-based. Both of these categories rely on the principle that users who shared similar interests and patterns in the past will likely continue to do so in the future.

Here are the key benefits of collaborative filtering:

- Improved User Experience: Personalized recommendations increase user satisfaction and encourage continued engagement.

- Enhanced Relevance: Goods and services tailored to individual preferences boost relevance and conversion rates.

- Scalability: Collaborative filtering can handle large datasets, making it suitable for extensive e-commerce and streaming platforms.

- Accuracy: Recommendations become more reliable as the quantity and quality of user-item interactions increase.
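
The user-based variant can be sketched in a few lines: predict a missing rating as a similarity-weighted average of the ratings given by other users. The function name, the rating matrix, and the convention that 0 means "not rated" are assumptions for this example; cosine similarity is one common choice among several.

```python
import numpy as np

def predict_rating(ratings, user, item):
    """User-based collaborative filtering with cosine similarity.

    A 0 in `ratings` means "not rated" (an assumption of this sketch).
    """
    others = ratings[:, item] > 0   # users who rated the item
    others[user] = False            # exclude the target user
    if not others.any():
        return 0.0
    norms = np.linalg.norm(ratings, axis=1)
    sims = (ratings @ ratings[user]) / (norms * norms[user] + 1e-12)
    weights = sims[others]
    return float(weights @ ratings[others, item] / (weights.sum() + 1e-12))

# Hypothetical ratings: rows are users, columns are items.
ratings = np.array([
    [5, 4, 0, 1],   # user 0 has not rated item 2
    [5, 5, 5, 1],   # user 1 has tastes similar to user 0
    [1, 1, 1, 5],   # user 2 has opposite tastes
], dtype=float)

predicted = predict_rating(ratings, user=0, item=2)
print(predicted)
```

The prediction lands closer to user 1's rating than to user 2's because user 1's rating history is more similar to user 0's.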

Machine Learning Approach

Machine learning algorithms like probabilistic matrix factorization and alternating least squares enable accurate predictive modeling in recommendation systems, enhancing user experience through highly personalized suggestions. In these systems, machine learning plays an essential role in condensing the complex dimensions of user preferences into actionable insights.

This process involves leveraging machine learning techniques such as clustering, which ensures that similar users and items are effectively grouped, and dimensionality reduction, which distills the most relevant features from large datasets to improve the effectiveness of recommendations.

Clustering and dimensionality reduction are often combined with these factorization models. Grouping similar users or items and reducing the number of features under consideration leaves a smaller problem for the predictive model to solve.

With these methods, businesses can find useful patterns and connections in very large datasets and build accurate, scalable models that anticipate customer needs, supporting business growth and customer satisfaction.

From streamlining data analysis to delivering tailored experiences, machine learning’s unique capabilities seamlessly facilitate progress in a wide range of industries. They are gradually reshaping the way that organizations interact with their customers.
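
Alternating least squares, mentioned at the start of this section, can be sketched as follows: user factors and item factors are updated in turn, each update being a small regularized least-squares problem over the observed ratings. The function signature, the rank, the regularization strength, and the rating matrix are assumptions for this example.

```python
import numpy as np

def als(R, mask, rank=2, reg=0.1, n_iters=20, seed=0):
    """Factor R ~ U @ V.T using only the entries where `mask` is True."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    U = rng.normal(scale=0.1, size=(n_users, rank))
    V = rng.normal(scale=0.1, size=(n_items, rank))
    ridge = reg * np.eye(rank)
    for _ in range(n_iters):
        # Fix the item factors and solve a small ridge regression per user.
        for u in range(n_users):
            Vo = V[mask[u]]
            U[u] = np.linalg.solve(Vo.T @ Vo + ridge, Vo.T @ R[u, mask[u]])
        # Fix the user factors and solve a small ridge regression per item.
        for i in range(n_items):
            Uo = U[mask[:, i]]
            V[i] = np.linalg.solve(Uo.T @ Uo + ridge, Uo.T @ R[mask[:, i], i])
    return U, V

# Hypothetical ratings; 0 marks a missing entry.
R = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
], dtype=float)
mask = R > 0

U, V = als(R, mask)
predictions = U @ V.T
rmse_observed = np.sqrt(np.mean((predictions[mask] - R[mask]) ** 2))
print(np.round(predictions, 2))
print(rmse_observed)
```

The entries of `predictions` at the masked-out positions are the model's estimates for ratings that were never observed, which is what a recommender would rank.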

Data-Driven Recommendations

Data-driven recommendation algorithms thrive on the granular analysis of user behavior and preferences. They leverage such insights to deliver finely tailored content and enhance overall user satisfaction. By continuously incorporating patterns from past interactions and product attributes, these systems ensure that suggestions are both relevant and compelling.

The use of machine learning and artificial intelligence enables these systems to adapt and improve over time, providing users with the content and products they are most likely to engage with.

Data-driven recommendation algorithms utilize several approaches:

- Collaborative filtering: This method relies on a shared user pool to predict preferences based on others with similar tastes.

- Content-based filtering: Analysis of the parameters and characteristics of items themselves drives this technique.

- Hybrid models: A blending of both collaborative and content-based filtering for more accurate suggestions.

- Advanced ML and AI methods: Incorporation of machine learning and AI to continually improve and adapt the recommendations.

The power of data-driven recommendations lies in their ability to build trust between users and the recommendation system. This trust, driven by consistently relevant and personalized content, can significantly boost user engagement and conversion rates.

Supervised Learning Algorithm Options

Choosing the right supervised learning algorithm is essential for the best predictive modeling. In supervised learning, the goal is to predict a target variable based on input variables. There are two primary types of supervised learning: classification and regression. Classification algorithms predict categorical values, while regression algorithms predict continuous values.

Key supervised learning algorithms include Linear Regression, Logistic Regression, Decision Trees, and Support Vector Machines. Linear Regression is suited to predicting continuous outcomes, while Logistic Regression predicts discrete classes, most commonly in binary classification tasks.

Decision Trees provide easily interpretable decision rules for predicting target variable values. Support Vector Machines are powerful in high-dimensional spaces and maximize margins between classes. Each algorithm has its strengths and weaknesses, making the choice dependent on the specific nature of the dataset.
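
The decision rules a tree learns come from searching for the split that leaves the purest groups. The sketch below finds the single best split by Gini impurity, which is one common criterion; the helper names and the six-row dataset are assumptions for the example, and a full tree would apply the same search recursively.

```python
import numpy as np

def gini(labels):
    """Gini impurity: 0 when all labels agree, larger when classes are mixed."""
    if len(labels) == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def best_split(X, y):
    """Return (feature index, threshold, weighted impurity) of the best single split."""
    best = (None, None, np.inf)
    for feature in range(X.shape[1]):
        values = np.unique(X[:, feature])
        # Candidate thresholds: midpoints between consecutive distinct values.
        for t in (values[:-1] + values[1:]) / 2:
            left = y[X[:, feature] <= t]
            right = y[X[:, feature] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best[2]:
                best = (feature, t, score)
    return best

# Hypothetical data: feature 0 separates the classes, feature 1 does not.
X = np.array([[2, 7], [3, 1], [4, 6], [6, 2], [7, 8], [8, 3]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])

feature, threshold, impurity = best_split(X, y)
print(feature, threshold, impurity)
```

The resulting rule reads as "if feature 0 is at most 5.0, predict class 0, otherwise class 1", which is the kind of interpretable rule a decision tree exposes.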

Regression Methods for Continuous Data

When analyzing continuous data, regression methods serve as a cornerstone for predicting outcomes and uncovering relationships between variables.

- Linear Regression: This traditional approach reliably handles continuous outcomes like sales revenue or temperature. It estimates the mean change in the dependent variable for a one-unit change in each independent variable, holding the others fixed, which makes the coefficients easy to interpret.

- Regularized Regression: Techniques like Lasso and Ridge regression provide remedies for multicollinearity by introducing bias to reduce large variances, leading to more useful coefficient estimates and robust models.

- Polynomial Regression: By capturing non-linear relationships, this method enhances predictability in data analysis. It is particularly useful for modeling complex patterns where simple linear regression falls short.

- Real-World Applications: Regression methods are extensively used in finance, economics, and marketing for forecasting purposes due to their ability to provide actionable insights and help stakeholders optimize future strategies.
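
The effect of regularization on multicollinearity can be seen in a small experiment. The sketch below fits the closed-form ridge solution with and without a penalty on two nearly identical features. The helper name, the synthetic data, and the penalty value `alpha=1.0` are assumptions for the example; setting `alpha=0.0` gives ordinary least squares.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge regression on centered data (the intercept is not penalized)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    coef = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return y_mean - x_mean @ coef, coef

# Hypothetical data: x2 is almost a copy of x1, and only x1 drives y.
rng = np.random.default_rng(0)
x1 = rng.normal(size=100)
x2 = x1 + rng.normal(scale=0.01, size=100)
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(scale=0.1, size=100)

_, ols_coef = ridge_fit(X, y, alpha=0.0)
_, ridge_coef = ridge_fit(X, y, alpha=1.0)
print("OLS coefficients:  ", ols_coef)
print("Ridge coefficients:", ridge_coef)
```

With nearly duplicate features, least squares cannot tell which one deserves the weight, so its coefficients are unstable. Ridge trades a small bias for lower variance and spreads the weight across both features.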

Classification and Binary Outcomes

Binary classification methods, such as logistic regression, support vector machines, naive Bayes, k-nearest neighbors, and random forests, predict discrete outcomes from continuous or categorical features, a fundamental task in data analysis across many domains.

Comparison of Binary Classification Algorithms

| Algorithm | How It Works | Strengths |
|---|---|---|
| Logistic Regression | Predicts probabilities | Interpretable; nonlinear relationships require transformed features |
| Support Vector Machines | Finds best hyperplane | Handles high dimensional data effectively |
| Naive Bayes | Assumes independence | Performs well with small datasets |
| K-Nearest Neighbors | Based on distance metrics | Nonparametric, simple to implement |
| Random Forests | Combines decision trees | Improves accuracy and reduces overfitting |

These algorithms are widely used across industries for tasks such as medical diagnosis, quality control, and information retrieval. Logistic regression provides high interpretability, while SVMs can handle complex nonlinear relationships through kernel functions. Naive Bayes performs well with small datasets, and KNN is simple yet effective. Random forests offer robustness by combining decision trees.
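
To show how logistic regression turns features into probabilities, here is a minimal sketch trained with batch gradient descent. The function names, learning rate, iteration count, and the hours-studied data are assumptions for the example; the feature is standardized first because gradient descent converges faster on scaled inputs.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iters=2000):
    """Fit weights (intercept first) by gradient descent on the log loss."""
    Xb = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iters):
        p = sigmoid(Xb @ w)
        w -= lr * Xb.T @ (p - y) / len(y)   # gradient of the mean log loss
    return w

def predict_proba(X, w):
    return sigmoid(np.column_stack([np.ones(len(X)), X]) @ w)

# Hypothetical data: hours studied and whether the exam was passed.
hours = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
passed = np.array([0, 0, 0, 1, 1, 1])
X = ((hours - hours.mean()) / hours.std()).reshape(-1, 1)

w = fit_logistic(X, passed)
probs = predict_proba(X, w)
predictions = (probs >= 0.5).astype(int)
print(np.round(probs, 3))
print(predictions)
```

The probabilities rise with hours studied, and thresholding them at 0.5 yields the class labels.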

Implementing Boosting and Bagging

By leveraging the predictive abilities of multiple models, boosting and bagging techniques both enhance the overall accuracy of classification tasks, offering robustness and increased precision in a wide range of data analysis scenarios. Ensemble learning is a fundamental concept behind these methods, where multiple ‘weak’ models are combined to create a ‘strong’ model.

Boosting algorithms like AdaBoost and Gradient Boosting are particularly effective. They train models sequentially, with each new model focusing on the errors of the previous ones: AdaBoost gives more weight to misclassified instances, while Gradient Boosting fits each new model to the errors that remain. Because later models address the weaknesses of earlier ones, accuracy usually improves.

- AdaBoost and Gradient Boosting: Popular boosting algorithms for high-precision classification.

- Bootstrap Sampling: Bagging involves creating multiple subsets of the original dataset using random sampling with replacement.

- Independent Modeling: In bagging, models are trained autonomously from each other.

- Bias Reduction: Boosting algorithms aim to diminish bias while bagging techniques focus on diminishing variance.
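
The bagging half of this comparison can be sketched as follows: draw bootstrap samples, train one simple model per sample independently, and combine the models by majority vote. The one-feature threshold model (a decision stump), the helper names, and the toy data are assumptions for the example.

```python
import numpy as np

def fit_stump(x, y):
    """Pick the threshold and direction on 1-D `x` with the lowest training error."""
    best = (np.inf, 0.0, 1)
    for t in np.unique(x):
        for polarity in (1, -1):
            pred = np.where(polarity * (x - t) >= 0, 1, 0)
            err = np.mean(pred != y)
            if err < best[0]:
                best = (err, t, polarity)
    return best[1], best[2]

def predict_stump(stump, x):
    t, polarity = stump
    return np.where(polarity * (x - t) >= 0, 1, 0)

def bagging_fit(x, y, n_models=25, seed=0):
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(x) indices with replacement.
        idx = rng.integers(0, len(x), size=len(x))
        models.append(fit_stump(x[idx], y[idx]))  # each model is trained independently
    return models

def bagging_predict(models, x):
    votes = np.mean([predict_stump(m, x) for m in models], axis=0)
    return (votes >= 0.5).astype(int)             # majority vote

# Hypothetical data: the label is 1 when x is at least 5.
x = np.arange(10.0)
y = (x >= 5).astype(int)

models = bagging_fit(x, y)
print(bagging_predict(models, np.array([0.0, 9.0])))
```

Each stump sees a slightly different sample and may pick a slightly different threshold; averaging their votes smooths out that variation, which is the variance reduction bagging is known for. Boosting would instead train the models one after another on reweighted data.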

Frequently Asked Questions

Which Algorithm Is Best in ML?

The best ML algorithm depends on the application. For regression, linear regression and its regularized variants are a strong starting point. For classification, logistic regression, decision trees, and SVMs are effective. For unsupervised tasks, clustering algorithms like k-means help uncover structure in the data.

Which Algorithm Is Used in Data Analysis?

Decision trees are widely used in data analysis due to their simplicity and interpretability. Supervised learning methods like linear and logistic regression are effective for continuous and binary predictions, respectively, while unsupervised methods like clustering algorithms are ideal for discovery.

What Are Machine Learning Algorithms in Data Analysis?

Machine learning algorithms in data analysis comprise supervised learning techniques like Linear Regression and Logistic Regression, unsupervised clustering methods like K-means, and feature selection strategies such as mutual information or correlation-based methods that keep only the most informative variables.

What Are the 10 Machine Learning Algorithms Every Data Scientist Should Know?

Lists of essential algorithms vary, but they typically include the ones covered in this article: Linear Regression, Logistic Regression, Decision Trees, Random Forest, Support Vector Machines (SVM), Naive Bayes, K-nearest neighbors (KNN), K-means, Principal Component Analysis (PCA), and Gradient Boosting.